Rethinking Data and Analytics in the Age of GenAI

Structuring your data and APIs to unlock the potential of GenAI

We are at the cusp of a tectonic shift - the combination of GenAI and data will unleash analytical capabilities and business insights never seen before. GenAI will forever change the way we interact with data. However these changes need us to rethink the way we collect, organize and store data. The bottlenecks of current data architecture will be amplified due to the demand for GenAI applications. In this article, I will talk about the key changes we will need to prepare data for more efficient GenAI applications through three emerging themes.

#1: Transition from SQL to Natural Language Queries

Today’s databases, data warehouses and data lakes are designed for data engineers. They support interaction via query languages such as SQL, GraphQL and MQL. These query languages are hard to learn for non technical users. GenAI is rapidly changing that and one of the most compelling promises of GenAI is enabling business users to directly query data using natural language.

When someone writes their queries in SQL, they specify the tables they want to use, how data between different tables should be connected, which columns should be used as predicates and specify how the result should be aggregated or summarized.

A natural language query however is open ended. When a large language model interprets the natural language query, it has to infer details about tables, predicates and aggregations from semantics of the natural language query. This is where good data design is important because a clear, unambiguous design will make the work of LLM a lot simpler and drastically reduce hallucinations. The following ideas will be helpful for this purpose:

Adopt a consistent and semantic naming standard for your tables and columns. Suppose a manager in a running shoe company asks in natural language “which city’s sales were lower than x for our cold weather product line”. This would result in a query on the Sales table with additional predicates involving the sales_amount column and product_category column. If the sales data is in a table named Order, and there is a table named Sales but it has the name of all the salespeople in the company instead of sales data, the LLM, no matter how large and smart it is won’t be able to generate the best query. Data dictionaries, metadata and just general “documentation” have been recommended best practices to follow since the early days of the database, but for GenAI these practices will make or break the application.

Consider vector indexing of rows. This may sound counter-intuitive to a data architect. If you think about it though, the main reason for column index in traditional databases is query performance. However if we vectorize every row and store it, you are able to create a signature of the entire row, kind of like an information hash. This will provide a powerful way for LLM query interpreters to pick candidate rows to answer a query. For examples, if a stock market analyst queries “show me all the stocks in oil sector that have a buy rating from goldman sachs analyst”, it would be much easier to instantly pick all the rows matching goldman analyst, buy, and oil sector through a vector similarity search as opposed to searching using a typical query such as analyst_affiliation = ‘GS’ and sector = ‘oil’ and rating = ‘buy’ which even if properly interpreted by the LLM may not have all the indices on those columns to perform well. In this case you can completely bypass SQL! Moreover, indexing the rows will also help the LLM match a query to the correct column. For example, if someone mentions ‘GS’ in their query, the LLM can use the information that GS exists in the analyst_affiliation column to create the appropriate SQL.

Simplify your schema. Whether to normalize or not has been a debate for as long as databases have existed. Sometimes normalization principles are relaxed to address reporting and analytics use cases. This becomes very important for SQL generation from natural language. If you have been following the benchmark results, you will notice that the fewer the joins, the better is the performance of LLM. This doesn’t mean there is a need to always denormalize, but as long as the joins make sense semantically i.e. when you say it out in plain English and it holds up, the LLM would be able to translate those queries into appropriate joins. Not surprisingly enough, good schema design which has sensible one-to-one, one-to-many, many-to-one relationships is key to getting good results.

As I was writing this article, Databricks made an announcement about their new data intelligence platform and I am very glad to see that they are making some of these ideas possible.

#2: Transition from Data Lake to Intelligent Knowledge Repository

Data Lakes have been instrumental in bringing together organizational data from disparate sources. The key advantage they provide is access to all kinds of data and datasets for application developers to build out analytics and machine learning solutions. Data Lakes when combined with GenAI have the potential to instantly become a knowledge repository for anyone in the organization to get insights by asking questions. Inviting users to ask questions in natural language sets up unrealistic expectations. Unlike the developers building analytics solutions, a user typing in queries doesn’t know what is available and what is not in the data repository. So, to make the user experience good, the solution needs to have comprehensive data, linkages between its data, as well links to external data in the catalog.

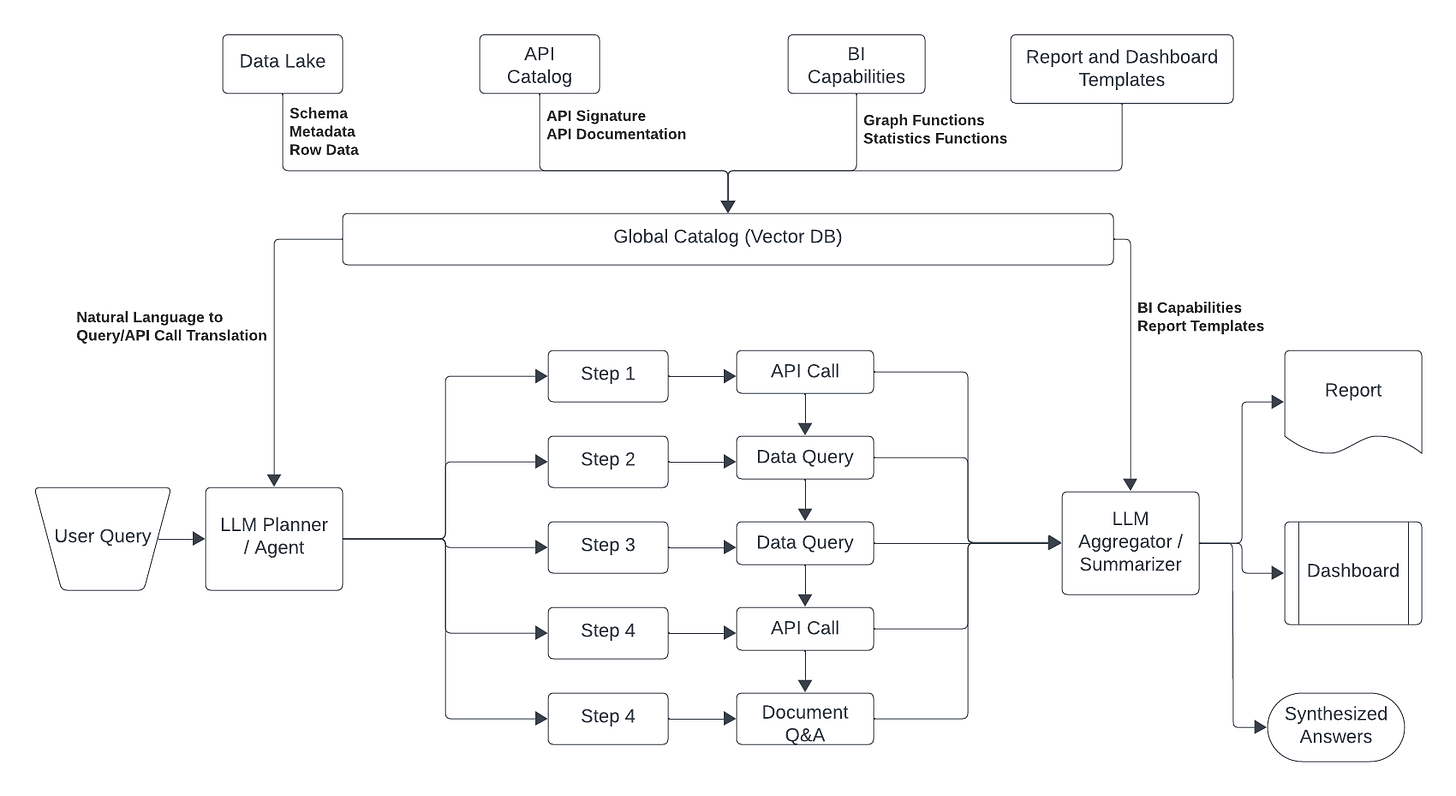

Add data API to catalog. Oftentimes, the structured data in the data lake may not have the complete picture. For example if a user asks a question “which is the most populated city where our product has grown in sales in only single digits”, the data lake may have all the relevant sales data but not know anything about population of cities. If a developer was writing a query for this, they would know that they can make an API call to the city demographics api (assuming the company has access to such an API). However, for a user asking away natural language questions, this will result in a deadend. The problem can be solved by adding the entire catalog of APIs to the catalog available to LLM. This will provide the LLM planner/agent a way to break down the query into steps (a.k.a step-by-step reasoning) and know how to access the data for each step.

Link, tag and organize data lake data. Data lakes bring all types of data into a single platform - structured, text, documents, videos, images, time series and so on. However if there’s no clear link between the data via an entity, or the order of occurrence of the data is unknown or duplicate sounding data from multiple business functions are present without appropriate tagging, the LLMs will not be able to disambiguate and will have incomplete data to work with. Consider this query “Tell me how Google's ad business did during the quarters their stock price change stayed under 5%”. The data lake may have all the 10-K filings of Google for the last ten years where ad revenue data is available and a table with Google’s daily stock price data. However if the 10-K documents are not tagged with company key and date and are not linked to the structured price table, there is no way for the LLM planner/agent to traverse this connection and create a comprehensive answer. Another important consideration is to tag the data in the lake by business functions such as sales, marketing, product etc. Oftentimes, there will be duplicate data across these sections e.g. customer information, and this segmentation will offer the LLM to use data from the correct functional area by interpreting the functional area from the query.

OpenAI’s recent announcement about Assistants API is a very early prototype of how the future data lake would look like. Imagine if this assistant could work at scale across thousands of documents, API calls and data tables. Today’s databricks announcement is a step in that direction.

#3: Transition from Business Intelligence and Data Analytics to Intelligent Agents.

This is where it all comes together. #1 and #2 are the building blocks to get to this step. While a one off natural language query can be answered by a couple of SQLs or a function call or two, a report or analytics dashboard involves strategic data retrieval from multiple sources followed by aggregation and analysis. An analytics dashboard or report is like a story written up for a particular audience. I see intelligent agents emerging here as the solution to replace hours of developer/business analyst work on dashboard creation. In #1 and #2, the user prompts were short and pointed. For #3 the user prompts will be descriptive - this will be like the business requirements for the reporting team succinctly described in a series of steps. For example, a prompt could be:

“Find all customers who logged in fewer than five times last month, then find the times when marketing emails were sent to them and then show me how quickly customers responded to marketing emails. Take all the emails to which the customers responded the fastest and show me the topics and products in those emails. Make this report using the weekly status template.”

The LLM agent in response to this query will retrieve data from email notifications table, customer activity table, get relevant emails from the data lake (via API if needed) and invoke a call to clustering and topic modeling APIs with the relevant emails.

The preparation steps (along with everything else from #1 and #2) for such solutions would be:

Add BI and analytic API calls to your catalog.

Add reporting templates to the catalog.

Create a prompt repository for recurring reports/dashboard.

Out of the three things discussed, this one is the most forward looking and I haven’t seen robust solutions in this space yet. Given the rapid pace of innovation and investment, I would expect these type of solutions to arrive within a year. This is a very hard problem and it is easier said than done. One should carefully test and evaluate the accuracy of such systems, and not fall for the marketing promises without due diligence.

The picture below shows a simplified and conceptual end-to-end architecture.

#4 Data Governance

One of the most tricky issues here will be how to ensure access control is always applied and confidential data does not get inadvertently exposed. Given the highly aggregated and summarized nature of the results, applying ACL post query or at a broad level would very likely lead to data leakage. Having ACL at the most granular level possible is the solution. Row level, column level, table level, document level, api level — apply ACL at the finest level possible.

#5 Fine Tuning

Although today’s LLMs are highly capable and general purpose in nature, it would still be a challenge to expect an out-of-the-box LLM work for your data without any effort from your end. It may be able to get you 80% there, but be prepared for fine tuning the models, prompt tuning, standardization of prompts to get consistently good performance. This will be a heavy lift and will need collaboration between business stakeholders, data owners, data engineers and LLM Engineers.